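科学软件网 (Scientific Software Network) specializes in research software. To date it distributes more than a thousand packages covering every discipline. In addition to software, it offers 66 courses covering 34 software packages. Popular packages include spss, stata, gams, sas, minitab, matlab, mathematica, lingo, hydrus, gms, pscad, mplus, tableau, eviews, nvivo, gtap, sequencher, simca, and others.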

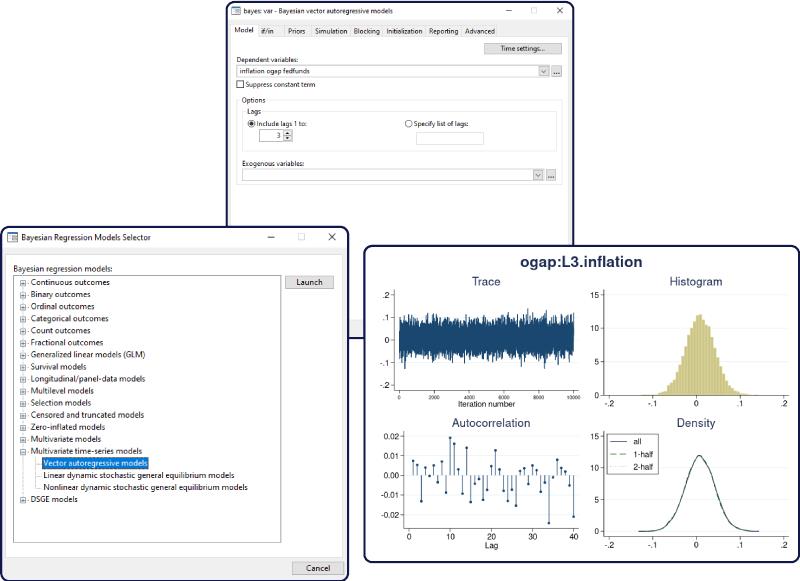

mean and posterior standard deviation, involve integration. If the integration cannot be performed analytically to obtain a closed-form solution, sampling techniques such as Monte Carlo integration and MCMC, as well as numerical integration, are commonly used.
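As a minimal sketch of Monte Carlo integration for posterior summaries, the Python example below estimates a posterior mean and standard deviation by averaging over posterior draws. The Beta(1, 1) prior, the binomial data (7 successes, 3 failures), and the function name `posterior_summaries` are assumptions made for illustration; the conjugate model is chosen only so that the estimates can be checked against closed-form values.

```python
import random
import statistics

def posterior_summaries(successes, failures, n_draws=100_000, seed=1):
    """Estimate the posterior mean and sd of a proportion by Monte Carlo integration.

    Assumes a Beta(1, 1) prior and binomial data, so the posterior is
    Beta(1 + successes, 1 + failures) and can be sampled directly.
    """
    rng = random.Random(seed)
    a, b = 1 + successes, 1 + failures
    draws = [rng.betavariate(a, b) for _ in range(n_draws)]
    # Sample averages over posterior draws approximate the posterior integrals.
    return statistics.fmean(draws), statistics.stdev(draws)

mc_mean, mc_sd = posterior_summaries(successes=7, failures=3)

# Closed-form values, available here only because the model is conjugate.
a, b = 8, 4
exact_mean = a / (a + b)
exact_sd = (a * b / ((a + b) ** 2 * (a + b + 1))) ** 0.5

print(mc_mean, exact_mean)
print(mc_sd, exact_sd)
```

In models without a closed-form posterior, the direct sampler would typically be replaced by MCMC draws, while the averaging step stays the same.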

Bayesian hypothesis testing can take two forms, which we refer to as interval-hypothesis testing and model-hypothesis testing. In interval-hypothesis testing, the probability that a parameter or a set of parameters belongs to a particular interval or intervals is computed. In model-hypothesis testing, the probability of a Bayesian model of interest given the observed data is computed.
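Interval-hypothesis testing can be sketched with posterior draws: the probability of the hypothesis is approximated by the fraction of draws that fall inside the interval. The Beta(8, 4) posterior and the interval [0.5, 1] below are hypothetical choices for illustration, as is the helper name `interval_probability`.

```python
import random

def interval_probability(draws, lower, upper):
    """Fraction of posterior draws that fall inside [lower, upper]."""
    inside = sum(1 for d in draws if lower <= d <= upper)
    return inside / len(draws)

rng = random.Random(2)
# Hypothetical posterior: Beta(8, 4), e.g. 7 successes and 3 failures under a flat prior.
posterior_draws = [rng.betavariate(8, 4) for _ in range(100_000)]

# Approximate posterior probability of the hypothesis 0.5 <= theta <= 1.
p_interval = interval_probability(posterior_draws, 0.5, 1.0)
print(p_interval)
```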

Model comparison is another common step of Bayesian analysis. The Bayesian framework provides a systematic and consistent approach to model comparison using the notion of posterior odds and the related Bayes factors. See [BAYES] bayesstats ic for details.
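To make posterior odds and Bayes factors concrete, the sketch below compares two hypothetical models for 7 successes in 10 binomial trials: one fixes the success probability at 0.5, the other places a Uniform(0, 1) prior on it. The data, both models, the equal prior odds, and the function names are assumptions for illustration, and this is not a description of how `bayesstats ic` computes its results.

```python
from math import comb

def marginal_likelihood_point(k, n, theta=0.5):
    """Marginal likelihood when theta is fixed: the binomial probability itself."""
    return comb(n, k) * theta**k * (1 - theta) ** (n - k)

def marginal_likelihood_uniform(k, n):
    """Marginal likelihood under a Uniform(0, 1) prior on theta.

    Integrating the binomial likelihood over theta gives exactly 1 / (n + 1).
    """
    return 1 / (n + 1)

k, n = 7, 10  # hypothetical data: 7 successes in 10 trials

# Bayes factor of model 1 (theta = 0.5) against model 2 (theta ~ Uniform(0, 1)).
bf_12 = marginal_likelihood_point(k, n) / marginal_likelihood_uniform(k, n)

prior_odds = 1.0                      # assumption: both models equally likely a priori
posterior_odds = bf_12 * prior_odds   # posterior odds = Bayes factor x prior odds
prob_m1 = posterior_odds / (1 + posterior_odds)

print(bf_12, posterior_odds, prob_m1)
```

The last quantity, the posterior probability of model 1 given the data, is the kind of number that model-hypothesis testing reports.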

Finally, prediction of some future unobserved data may also be of interest in Bayesian analysis. The prediction of a new data point is performed conditional on the observed data using the so-called posterior predictive distribution, which involves integrating out all parameters from the model with respect to their posterior distribution. Again, Monte Carlo integration is often the only feasible option for obtaining predictions. Prediction can also be helpful in estimating the goodness of fit of a model.
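The posterior predictive distribution can be approximated by simulation: draw a parameter value from the posterior, then draw new data given that value, and repeat. The sketch below assumes a hypothetical Beta(8, 4) posterior for a success probability and predicts the number of successes in 5 future trials; all names and numbers are illustrative.

```python
import random

def posterior_predictive_draws(a, b, m, n_draws=50_000, seed=3):
    """Simulate the number of successes in m future Bernoulli trials.

    Each replicate first draws theta from the Beta(a, b) posterior and then
    simulates m trials given that theta, which integrates theta out by
    Monte Carlo.
    """
    rng = random.Random(seed)
    draws = []
    for _ in range(n_draws):
        theta = rng.betavariate(a, b)
        successes = sum(1 for _ in range(m) if rng.random() < theta)
        draws.append(successes)
    return draws

pred = posterior_predictive_draws(a=8, b=4, m=5)
pred_mean = sum(pred) / len(pred)            # predictive mean of future successes
prob_all_five = pred.count(5) / len(pred)    # predictive probability of 5 out of 5
print(pred_mean, prob_all_five)
```

Comparing such simulated data with the observed data is one common way to use prediction as a goodness-of-fit check.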

How to do Bayesian analysis

Bayesian analysis starts with the specification of a posterior model. The posterior model describes the probability distribution of all model parameters conditional on the observed data and some prior knowledge. The posterior distribution has two components: a likelihood, which includes information about model parameters based on the observed data, and a prior, which includes prior information (before observing the data) about model parameters. The likelihood and prior models are combined using the Bayes rule to produce the posterior distribution.
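In symbols, with θ denoting the model parameters and y the observed data (notation assumed here for illustration, since the excerpt does not fix any), the Bayes rule reads:

```latex
p(\theta \mid y) \;=\; \frac{p(y \mid \theta)\, p(\theta)}{p(y)}
\;\propto\; p(y \mid \theta)\, p(\theta),
\qquad
p(y) \;=\; \int p(y \mid \theta)\, p(\theta)\, d\theta .
```

Here p(y | θ) is the likelihood, p(θ) is the prior, and p(y) is a normalizing constant that does not depend on θ, so the posterior is proportional to the likelihood times the prior.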

Bayesian inference provides a straightforward and more intuitive interpretation of the results in terms of probabilities. For example, credible intervals are interpreted as intervals to which parameters belong with a certain probability, unlike the less straightforward repeated-sampling interpretation of the confidence intervals.
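The probability interpretation of a credible interval can be illustrated with posterior draws. The sketch below computes an equal-tailed 95% interval from draws of a hypothetical Beta(8, 4) posterior and then checks that about 95% of the draws lie inside it; the posterior and the helper name `equal_tailed_interval` are assumptions, and other interval types (for example, highest posterior density) are not shown.

```python
import random

def equal_tailed_interval(draws, level=0.95):
    """Equal-tailed credible interval from posterior draws via empirical quantiles."""
    ordered = sorted(draws)
    tail = (1 - level) / 2
    lo_idx = int(tail * len(ordered))
    hi_idx = int((1 - tail) * len(ordered)) - 1
    return ordered[lo_idx], ordered[hi_idx]

rng = random.Random(4)
draws = [rng.betavariate(8, 4) for _ in range(100_000)]

lower, upper = equal_tailed_interval(draws)

# The parameter lies in [lower, upper] with posterior probability of about 0.95.
coverage = sum(lower <= d <= upper for d in draws) / len(draws)
print(lower, upper, coverage)
```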

Bayesian models satisfy the likelihood principle (Berger and Wolpert 1988) that the information in a sample is fully represented by the likelihood function. This principle requires that if the likelihood function of one model is proportional to the likelihood function of another model, then inferences from the two models should give the same results. Some researchers argue that frequentist methods that depend on the experimental design may violate the likelihood principle.
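A standard textbook illustration of the likelihood principle, sketched here under assumed data, is 3 successes in 12 trials observed under two designs: a fixed number of trials (binomial) versus sampling until the third success (negative binomial). The two likelihoods differ only by a constant factor, so under the same prior (flat, on a grid, in this sketch) the normalized posteriors coincide.

```python
from math import comb

def normalized_posterior(likelihood, grid):
    """Posterior on a grid under a flat prior: likelihood values rescaled to sum to 1."""
    weights = [likelihood(t) for t in grid]
    total = sum(weights)
    return [w / total for w in weights]

def binomial_lik(theta):
    # Design A: the number of trials (12) was fixed in advance.
    return comb(12, 3) * theta**3 * (1 - theta) ** 9

def negative_binomial_lik(theta):
    # Design B: sampling continued until the 3rd success, which arrived on trial 12.
    return comb(11, 2) * theta**3 * (1 - theta) ** 9

grid = [i / 200 for i in range(1, 200)]
post_a = normalized_posterior(binomial_lik, grid)
post_b = normalized_posterior(negative_binomial_lik, grid)

# Largest pointwise difference between the two posteriors (zero up to rounding).
max_diff = max(abs(x - y) for x, y in zip(post_a, post_b))
print(max_diff)
```

Frequentist p-values for the same data generally differ between the two designs, which is the kind of design dependence the paragraph above refers to.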

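Both of the lectures above are free of charge, and everyone is welcome to register.

科学软件网 holds public-interest training sessions and lectures from time to time, giving you more opportunities to learn and become familiar with the software for free.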

http://turntech8843.b2b168.com

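Welcome to the website of 北京天演融智软件有限公司 (Beijing Tianyan Rongzhi Software Co., Ltd.). The company is located at Rooms 1-312-318 and 1-312-319, Floor 3, Building 1, No. 35 Shangdi East Road, Haidian District, Beijing. The owner is Zhao Yajun (赵亚君).

北京天演融智软件有限公司 (科学软件网) mainly sells PSCAD, CYME, SPSSPRO, Stata, Matlab, GAMS, Hydrus, GMS, Visual Modflow, and other software across disciplines. 科学软件网 has more than 20 years of software sales experience and provides professional sales and training services along with further value-added services. It currently offers several hundred software packages covering fields that include economics and management, simulation, earth science and geography, biochemistry, engineering science, typesetting, and network management.

Registered capital: RMB 10 million to 50 million.

Our products are excellent and our service is of high quality. You can choose us with confidence, and we will be proud to earn your recognition.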